Learn to harness the full potential of Meta's Code Llama in your coding workflow. Explore step-by-step instructions for using Code Llama directly within your favorite IDE and discover the benefits of local and cloud-based setups. Level up your coding game with Code Llama today!

In part 1, we got acquainted with the model's main capabilities through a friendly Hugging Face interface. To see the model in practical use, let's connect it to a development environment (IDE) and write some code.

There are many ready-to-use IDE extensions that you can download for IntelliJ IDEA, VS Code, and other editors. I’m going to use IntelliJ IDEA.

Run the model locally

Let’s start with the CodeGPT plugin.

1. Go to Settings → Plugins, find “CodeGPT” on the Marketplace, and click “Install”.

2. Once the plugin is installed, restart the IDE to activate it.

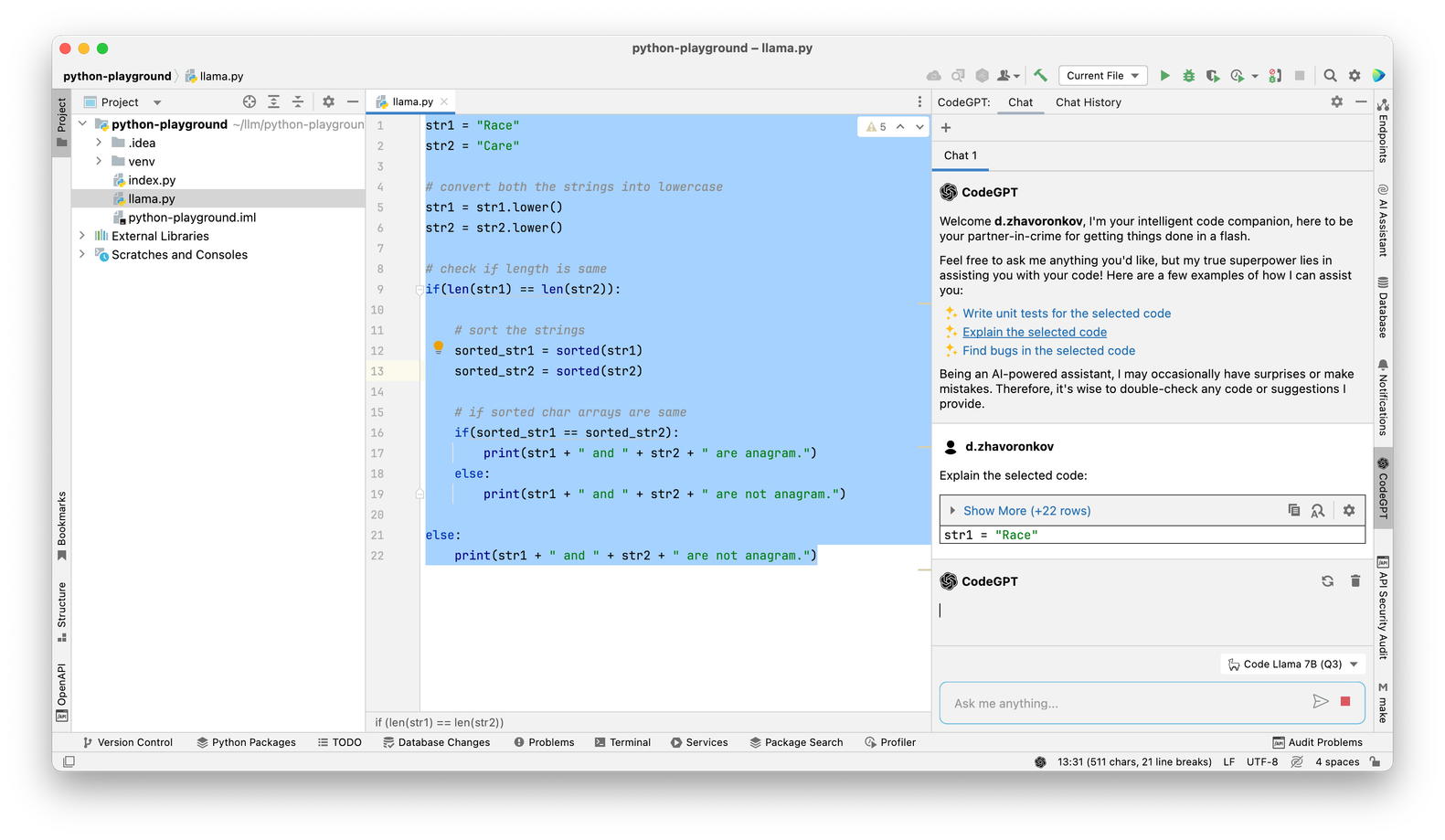

3. After the restart, you can find the CodeGPT plugin tab in the IDE interface.

4. Before we start using the plugin, we need to configure it to work with Code Llama. Go to Settings (hotkey: Cmd/Ctrl + ,) → Tools → CodeGPT.

5. First, select Llama from the Service menu. Set Server to “Run local server” and Model Preferences to “Use pre-defined model”. Then select Code Llama 13B and click “Download Model” below. Once the model is downloaded, start your local server by clicking “Start server” below.

6. Once the server is started, click OK and go back to the CodeGPT tab. You can chat with this model as you usually do with ChatGPT, but your requests can be more code- and programming-specific thanks to Code Llama. The window offers some predefined prompts, such as “Explain the selected code”. Click one, and the model will review your code and respond with an explanation.

7. That’s it! Now you can use Code Llama locally and ask it anything, from “refactor this code” to “write Python code that sorts numbers”.

In addition to local Code Llama, this plugin also supports OpenAI, Azure, and You.com as alternatives. Play around!

Keep in mind that this process can be very slow: the model needs substantial GPU resources, and your computer will likely run it on the CPU only.
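To put that hardware requirement in numbers, here is a rough back-of-the-envelope sketch. It assumes that memory use is dominated by the model weights (parameter count times bits per weight) and ignores activations and caches, so real usage will be higher. The function name is made up for illustration.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory needed just to hold the model weights, in GB."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Code Llama 13B at 16-bit precision versus a 4-bit quantized copy
print(weight_memory_gb(13, 16))
print(weight_memory_gb(13, 4))
```

By this estimate, the 16-bit weights alone need more memory than most consumer GPUs provide, which is why local setups usually rely on quantized models or fall back to the CPU.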

To get around this limitation, you can set up the model on a remote server using the “Use remote server” setting, but that is a big topic for a standalone article.

Let's go further and try the computing power of the Hugging Face Inference API. For this we need another IntelliJ plugin.

Run the model remotely using StarCoder

Let’s utilize cloud computing power using the StarCoder plugin.

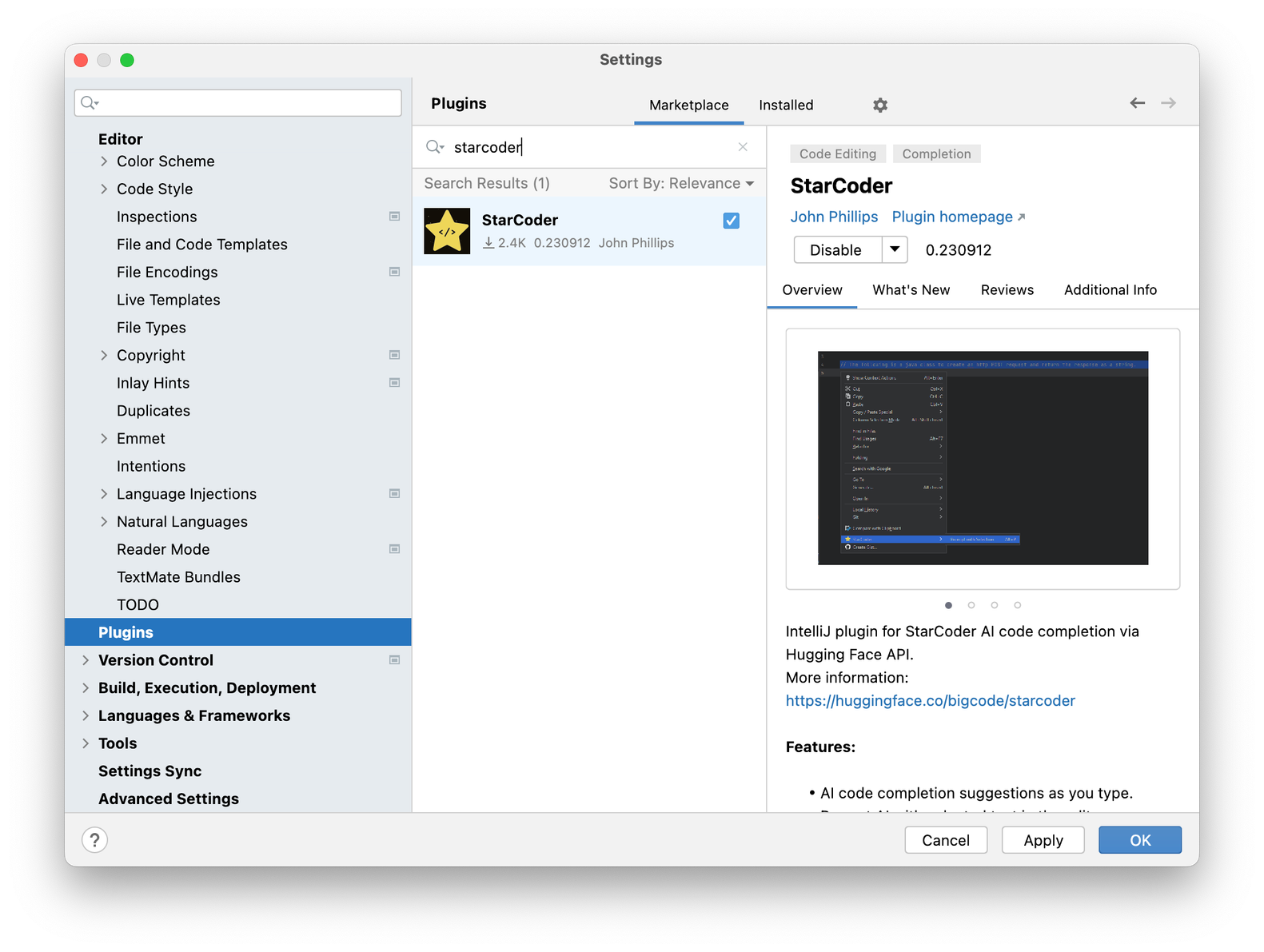

1. Go to Settings → Plugins, find “StarCoder” on the Marketplace, and click “Install”.

2. Once the plugin is installed, restart the IDE to activate it.

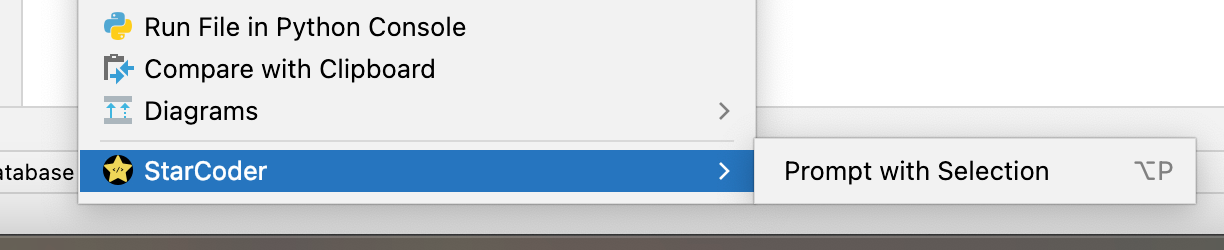

3. After the restart, you can find the StarCoder plugin in the context menu:

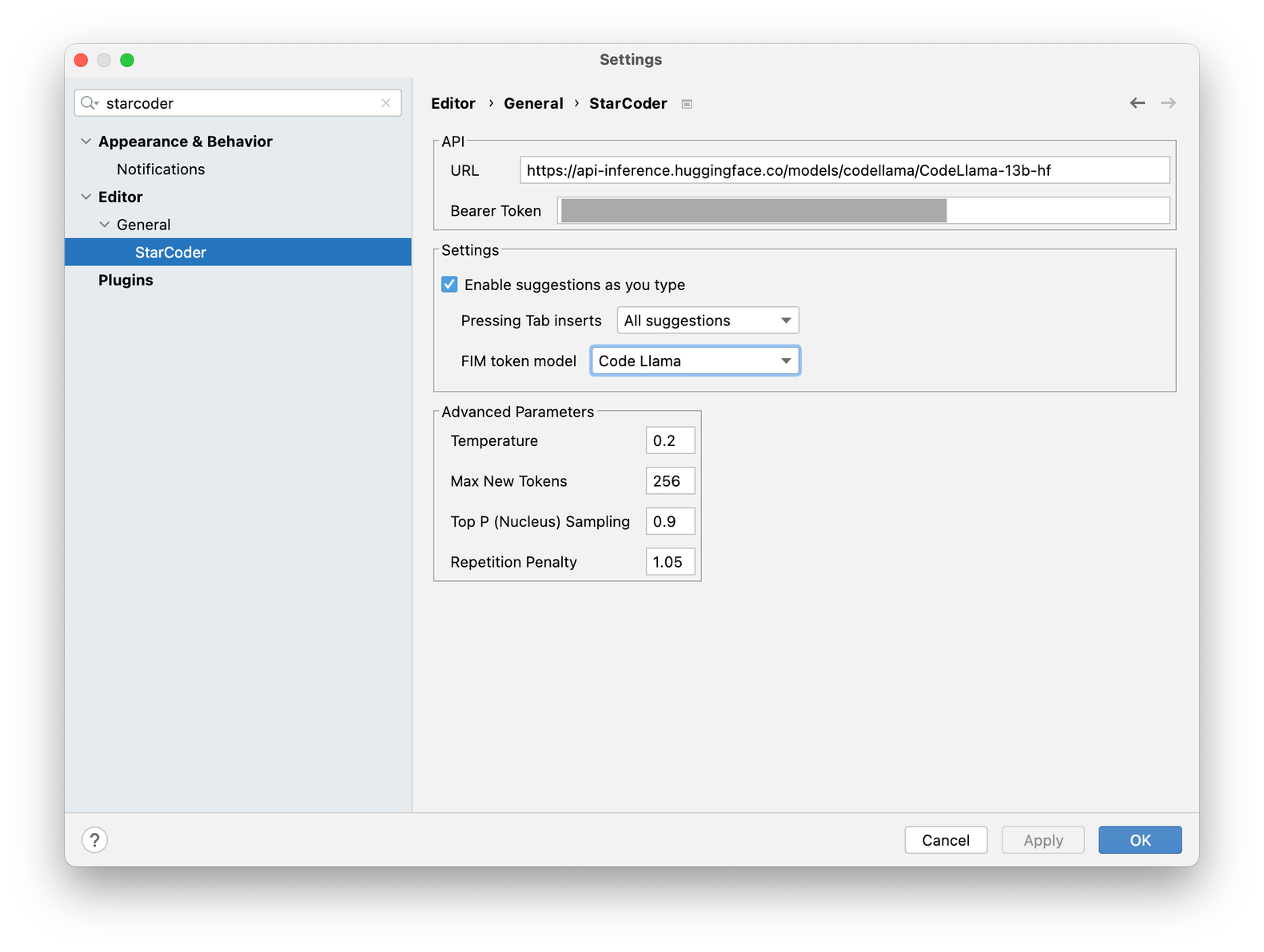

4. Before we start using the plugin, we need to configure it to work with Code Llama. Go to Settings (hotkey: Cmd/Ctrl + ,) → Editor → General → StarCoder.

5. First, set the inference API URL. Since we are going to use Code Llama, set it to https://api-inference.huggingface.co/models/codellama/CodeLlama-13b-hf.

6. Then put your Hugging Face API token in the Bearer Token input.

See how to generate and get this token in one of the previous articles: What is Hugging Face, and how do I use Huggingface.js?

7. As the last step, set the FIM token model to “Code Llama”.

8. When you are ready, click OK and go back to the IDE.
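The FIM (fill-in-the-middle) setting from step 7 matters because infilling models expect the code before and after the cursor to be wrapped in special tokens. As a rough sketch, Code Llama's reference implementation formats an infilling prompt as `<PRE> {prefix} <SUF>{suffix} <MID>`. Whether the plugin builds exactly this string is an assumption, and `build_fim_prompt` is a hypothetical helper, not part of any library.

```python
def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Wrap the code around the cursor in Code Llama's infilling tokens.

    The model is expected to generate the missing middle part after <MID>.
    """
    return f"<PRE> {prefix} <SUF>{suffix} <MID>"


# Code before and after the cursor position in the editor
before_cursor = "def is_even(n):\n    return "
after_cursor = "\n"
print(build_fim_prompt(before_cursor, after_cursor))
```

Other model families use different marker tokens, which is presumably why the plugin asks you to pick the token model explicitly.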

This plugin works differently from the first one. It has no chat interface; instead, you write a request directly in a file (for example, in a comment), and the plugin sends it to the model hosted on Hugging Face.

After processing, the plugin receives the response and inserts it into the file.
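To make that round trip more concrete, here is a minimal sketch of a similar request made by hand with Python's standard library. It uses the same URL and bearer token as the plugin settings above. The payload fields (`inputs`, `parameters`) and the `generated_text` response field follow the Hugging Face text-generation API, but the plugin's actual request may differ, and the function names are made up for illustration.

```python
import json
import urllib.request

API_URL = "https://api-inference.huggingface.co/models/codellama/CodeLlama-13b-hf"


def build_request(prompt: str, token: str, max_new_tokens: int = 128) -> urllib.request.Request:
    """Prepare a POST request carrying the prompt and the bearer token."""
    payload = {
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens, "return_full_text": False},
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def complete(prompt: str, token: str) -> str:
    """Send the prompt to the hosted model and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, token), timeout=60) as resp:
        body = json.load(resp)
    # Text-generation responses arrive as a list of {"generated_text": ...}
    return body[0]["generated_text"]
```

Calling `complete("# write python code that checks if two strings are anagrams\n", token)` with your own token would return the text that the plugin inserts into the file.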

In my example, I asked the model to write Python code that checks whether two strings are anagrams of each other. On the first request, the model produced broken code, but on the second it produced a working version.

Input: <code>write python code that checks if two string are anagram or not</code>

Output:

The StarCoder interface is not as convenient or advanced as CodeGPT's, but in this case we do not need to set up the model ourselves. We just connect to the free Hugging Face API!
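For reference, a correct answer to the anagram prompt could look like the sketch below. It is hand-written for illustration and is not the model's actual output. Ignoring case and spaces is a design choice of this sketch, not something the prompt asked for.

```python
from collections import Counter


def are_anagrams(first: str, second: str) -> bool:
    """Return True if both strings contain the same letters the same number of times."""

    def letter_counts(text: str) -> Counter:
        # Ignore case and spaces so that "Dormitory" matches "dirty room"
        return Counter(text.replace(" ", "").lower())

    return letter_counts(first) == letter_counts(second)


print(are_anagrams("listen", "silent"))
print(are_anagrams("hello", "world"))
```

A small reference like this is handy for checking generated code, since the model's first attempt may be broken.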

How to work directly with the model

You can also download the model and run it locally, or connect to it through the Hugging Face Transformers API and do the same using the power of the cloud.

This model is not supported by the Transformers.js library yet, so you have to use the Hugging Face Transformers library for Python or set the model up with Python on your local computer. This process is described on the official Code Llama GitHub page https://github.com/facebookresearch/codellama .
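As a minimal sketch of the Python route, the code below loads the model with the Hugging Face Transformers library and completes a prompt. It assumes `transformers` and `torch` are installed and that your machine has enough memory for the chosen checkpoint. The helper names are made up for illustration, and the model ID simply reuses the one from the inference URL above.

```python
def strip_prompt(generated: str, prompt: str) -> str:
    """Return only the new text if the model echoed the prompt at the start."""
    return generated[len(prompt):] if generated.startswith(prompt) else generated


def complete_locally(prompt: str,
                     model_id: str = "codellama/CodeLlama-13b-hf",
                     max_new_tokens: int = 128) -> str:
    """Generate a completion for the prompt with a locally loaded model."""
    # Imported lazily because transformers and torch are heavy dependencies
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # Downloads the weights on first use, which can take a while
    model = AutoModelForCausalLM.from_pretrained(model_id)

    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    text = tokenizer.decode(output_ids[0], skip_special_tokens=True)
    return strip_prompt(text, prompt)
```

Calling `complete_locally("def fibonacci(n):")` would download the checkpoint and return the model's continuation. A smaller checkpoint ID can be passed as `model_id` if memory is tight.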

Conclusion

Meta's Code Llama shows how much AI can change software development. With it, developers can work more efficiently, improve code quality, and understand unfamiliar code faster. Because the model is openly available, it is accessible to the whole developer community and easy to build on. Code Llama is a significant step forward in AI-assisted development for individuals and organizations alike.

P.S. Don't forget to check out the resources below for more info and to start coding with Code Llama:

- Meta's Code Llama webpage: https://ai.meta.com/blog/code-llama-large-language-model-coding/

- Code Llama GitHub repository: https://github.com/facebookresearch/codellama

- Hugging Face Code Llama page: https://huggingface.co/codellama

- Hugging Face Code Llama Transformers page: https://huggingface.co/docs/transformers/model_doc/code_llama

Happy coding!